What is an AI chatbot? Examples with zero and few shot prompts

Overview

Introduction

There’s no doubt we’re living in an exciting era for machines!

Artificial Intelligence (AI) capabilities have grown rapidly in recent years, helping humans and automating some of their tasks.

In this article, you’ll get a brief look at one application of AI in modern products: the chatbot.

Simply speaking, what is a chatbot?

A chatbot is a machine that tries to simulate a conversation the way a real human being would. Here, “conversation” covers both text and voice messages, although most chatbots are designed for textual interaction.

There are various types of chatbots available. For instance, Markov Chain Chatbots predict the next chat state based solely on the current state and a given probability. The definition of state depends on the chatbot's implementation.

One example is when you start typing words into your phone’s messaging app, and the machine tries to predict the next word. In that case, the state is the current word (or a set of words) you've typed. For instance, if I type “Let’s order a” in my messaging app, it’ll probably predict the next word as “pizza!” since the state “order a” on my phone has a high probability of going to “pizza”.
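The next-word idea can be sketched in a few lines of Python. This is only a minimal illustration, assuming the state is a single word and the probabilities come from counting word pairs in a tiny, made-up typing history; the names `build_transitions`, `predict_next`, and `history` are hypothetical.

```python
from collections import Counter, defaultdict


def build_transitions(sentences):
    """Count, for every word, which words follow it in the given sentences."""
    transitions = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            transitions[current_word][next_word] += 1
    return transitions


def predict_next(transitions, current_word):
    """Return the most frequent follower of current_word, or None if unseen."""
    followers = transitions.get(current_word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up typing history; a real keyboard would learn from far more text.
history = [
    "let's order a pizza",
    "let's order a pizza tonight",
    "let's order a salad",
]

transitions = build_transitions(history)
suggestion = predict_next(transitions, "a")
print(suggestion)
```

The prediction depends only on the current word, not on anything typed earlier, which is what makes this a Markov chain.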

Markov Chain chatbots are among the simplest types of machine chat simulation. Moving forward in time, we have large language models (LLMs). Loosely speaking, those are a giant and more intelligent version of a Markov Chain: the state they consider is much larger than a word or two, covering a long stretch of the conversation. Consequently, LLM chatbots also try to predict the conversation's next state, but far more accurately.

The LLM chatbot prompt message and roles

The basic functioning of a chatbot revolves around the prompt and the roles of its messages.

A prompt is how the user interacts with the machine. In other words, it’s the user input to the LLM. That input can come in various formats like text, audio, image, etc., depending on the purpose of the LLM.

In the case of chatbots, that input is typically text, such as when a user is trying to get answers to their questions. For example, a user complains to the chatbot about a defective product. The input can also come in audio or image formats. For instance, imagine a chatbot that requires an image as proof before it can make a decision.

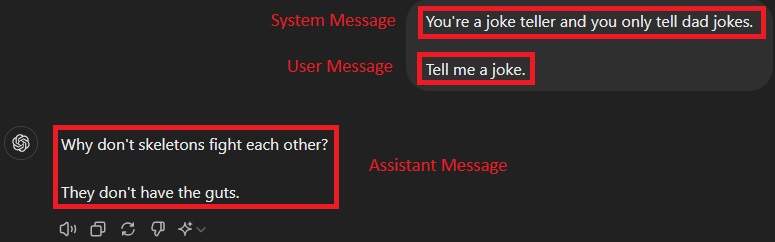

Every prompt has a set of messages with three possible roles:

- User message: This is the message that the end user types into the console.

- System message: This message augments the prompt with guidelines or other contextual information.

- Assistant message: This is the message generated by the chatbot.

The system message is where we, as application developers, can enhance the prompt by providing instructions and context that the user message typically lacks.
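As a rough sketch, a prompt with these three roles can be represented as a list of messages. The role names come from the list above; the dictionary layout with `role` and `content` keys is an assumption modeled on common chat APIs, and `make_message` and the message texts are made up for illustration.

```python
# Roles described in the article. The {"role", "content"} layout is an
# assumption, not the format of any specific chatbot product.
VALID_ROLES = {"system", "user", "assistant"}


def make_message(role, content):
    """Build one chat message, rejecting roles outside the three known ones."""
    if role not in VALID_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    return {"role": role, "content": content}


conversation = [
    # Written by the application developer: guidelines and context.
    make_message("system", "You are a support agent for an online store. Be polite and concise."),
    # Typed by the end user.
    make_message("user", "The product I received is defective."),
]

# Once the chatbot replies, the reply is stored as an assistant message so the
# next turn sees the whole history. This reply text is invented.
conversation.append(
    make_message("assistant", "I'm sorry to hear that. Could you share your order number?")
)

for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

Keeping the system message separate from the user message is what lets the developer add instructions without the end user ever typing them.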

Zero-shot prompting

Zero-shot prompting is a prompt engineering technique for communicating effectively with LLMs. By “zero-shot,” we mean that no examples are given to the LLM. We rely primarily on the LLM's built-in knowledge to answer the prompt.

Because the prompt needs no examples or extra training data, the same LLM adapts easily to different tasks. In other words, you don’t need to be too specific or provide additional context to the LLM. You simply ask it to perform a task.

For instance:

    Classify the following `text` into one of the categories.

    text: The movie was fantastic, and I loved every moment.

    categories:
    - Positive
    - Negative
    - Neutral

Without any prior information or conversational history, GPT-3 responds with the following:

    The text "The movie was fantastic, and I loved every moment." falls under the category:

    - Positive

The LLM responds correctly to the zero-shot prompt because the task, sentiment analysis, relies only on general knowledge. It also responds concisely because we limited its options to 3 categories.
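If the same classification request has to be sent for many different texts, the zero-shot prompt can be assembled by a small helper. The sketch below only builds the prompt string; it does not call any LLM, and the function name `build_zero_shot_prompt` is hypothetical.

```python
def build_zero_shot_prompt(text, categories):
    """Build a classification prompt that contains no examples (zero shots)."""
    lines = [
        "Classify the following `text` into one of the categories.",
        "",
        f"text: {text}",
        "",
        "categories:",
    ]
    # One bullet per allowed category keeps the model's answer constrained.
    lines += [f"- {category}" for category in categories]
    return "\n".join(lines)


prompt = build_zero_shot_prompt(
    "The movie was fantastic, and I loved every moment.",
    ["Positive", "Negative", "Neutral"],
)
print(prompt)
```

The resulting string would then be sent as the user message to whichever LLM the application uses.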

Other well-suited zero-shot use cases are translating a piece of text and recalling well-known facts (like when the Roman Empire fell). Pretty much anything that doesn’t demand extra examples or context is a good fit.

If a zero-shot prompt doesn’t work properly, we can use techniques that give the LLM more information, such as few-shot prompting.

Few-shot prompting

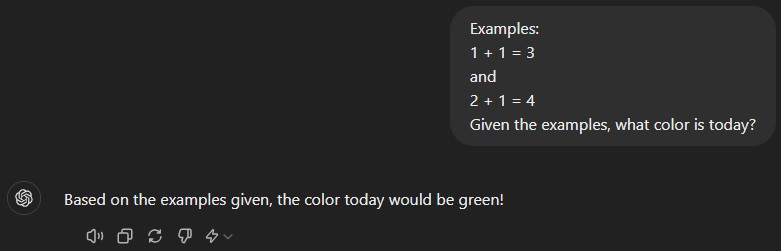

In contrast to zero-shot prompts, few-shot prompts include examples that aim to help the LLM perform the task more accurately.

The few-shot technique mixes the LLM's built-in knowledge with the additional information in the prompt (typically provided as a system message), making the LLM more sensitive to the details in the prompt.

Let’s look at one example:

    You’re a text classifier. Text can be classified into one of the categories:

    categories:
    - Positive
    - Negative
    - Neutral

    Examples:

    Text: The movie was fantastic, and I loved every moment.
    Target: Positive

    Text: I hate not being in the front row in a concert.
    Target: Negative

    Using the same logic as the examples above, classify the following text:

    Text: I love pizza!
    Response:

In the prompt above, the system message includes examples of how to answer a text classification task. Each example is a “shot” in few-shot prompting, so with 2 examples this is a 2-shot prompt. The LLM classifies “I love pizza!” as Positive.

Note that in the previous prompt, we correctly taught the LLM how to answer the classification task via examples, and its response was correct. However, let’s see what happens if we provide incorrect examples:

    You’re a text classifier. Text can be classified into one of the categories:

    categories:
    - Positive
    - Negative
    - Neutral

    Examples:

    Text: The movie was fantastic, and I loved every moment.
    Target: Negative

    Text: I hate not being in the front row in a concert.
    Target: Positive

    Using the same logic as the examples above, classify the following text:

    Text: I love pizza!
    Response:

The LLM answers with Negative.

That response is incorrect, since “I love pizza!” is clearly a positive phrase, not a negative one. However, our examples taught the LLM to label positive text as Negative and negative text as Positive, and we asked it to follow the same logic as those examples. So that’s what the LLM does.

Therefore, it’s essential to create few-shot prompts more carefully than zero-shot prompts since we provide some source of truth as examples and those examples might be wrong.
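One way to apply that care is to assemble few-shot prompts in code and run a basic sanity check on the examples first. The sketch below is an illustration under assumptions: `build_few_shot_prompt` is a hypothetical helper, it only builds the prompt string, and its check catches labels outside the category list (such as typos). It cannot detect a swapped label like the one in the incorrect prompt above, so the examples still need a human review.

```python
def build_few_shot_prompt(categories, examples, text):
    """Build a classification prompt with one 'shot' per (text, label) example."""
    # Sanity check: every example label must be one of the allowed categories.
    for example_text, label in examples:
        if label not in categories:
            raise ValueError(f"label {label!r} for {example_text!r} is not a known category")

    lines = [
        "You're a text classifier. Text can be classified into one of the categories:",
        "",
        "categories:",
    ]
    lines += [f"- {category}" for category in categories]
    lines += ["", "Examples:"]
    for example_text, label in examples:
        lines += ["", f"Text: {example_text}", f"Target: {label}"]
    lines += [
        "",
        "Using the same logic as the examples above, classify the following text:",
        "",
        f"Text: {text}",
        "Response:",
    ]
    return "\n".join(lines)


categories = ["Positive", "Negative", "Neutral"]
examples = [
    ("The movie was fantastic, and I loved every moment.", "Positive"),
    ("I hate not being in the front row in a concert.", "Negative"),
]
prompt = build_few_shot_prompt(categories, examples, "I love pizza!")
print(prompt)
```

Here the number of entries in `examples` is the number of shots, so this call produces a 2-shot prompt.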

Conclusion

In this brief article, you’ve got an idea of what a chatbot is: basically, a predictor of the next state based on the current state.

You’ve also learned how to apply the zero-shot and few-shot prompt techniques to get better answers.